How do we handle hallucinations & inaccuracies?

In general, how do we deal with non-deterministic machines?

We are working on several sophisticated approaches to address inaccuracies and hallucinations. We believe these issues will diminish as models improve, but we will never have fully deterministic AI systems, just as human behavior is never fully deterministic.

While we work to reduce these occurrences incrementally, SocratiQ already offers four simple ways for teachers and students to deal with today's limitations:

Preemptive teacher guidance through intentions: SocratiQ inquiries and quizzes let teachers specify and edit intentions. These intentions govern SocratiQ’s feedback, follow-up, and generation mechanisms.

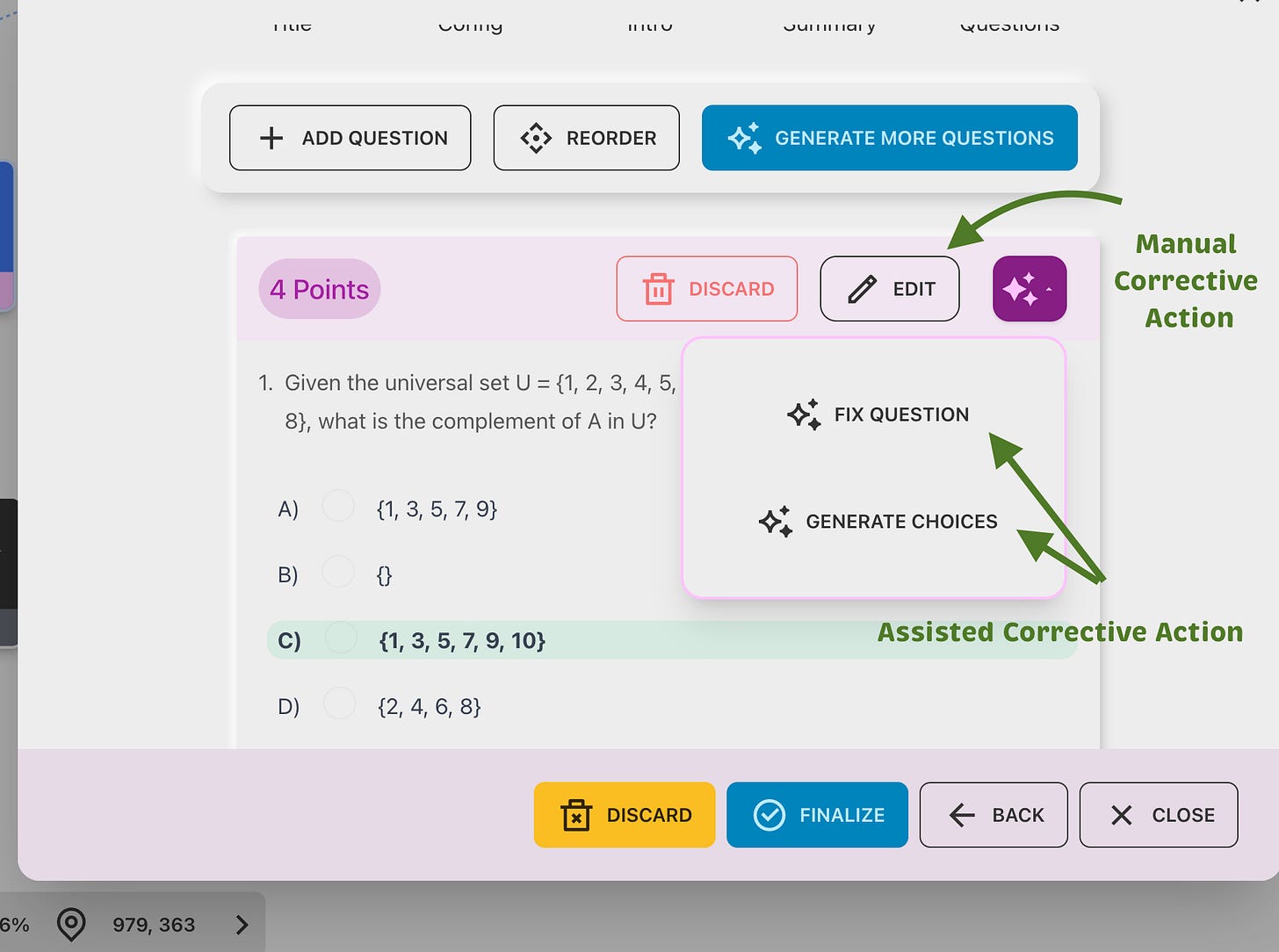

Corrective, assisted teacher overrides: After reviewing SocratiQ-generated quiz questions, answer choices, inquiries, and topics for accuracy and suitability, teachers can either correct them directly or have SocratiQ regenerate them.

Retrospective teacher intervention: Teachers can attach corrective notes or guidance as addenda to generated content and student responses to clarify or correct them.

Student education: The first time students generate a guide or lesson in a SocratiQ exploration, they are shown a dialog that makes sure they understand they are working with an extremely useful but not completely reliable system.

We believe that AI-assisted learning offers students remarkable opportunities and tools, reduces teacher workload while freeing teachers to move up the creative ladder, and significantly reduces school IT expenses.

By making sure that teachers and students are aware of this non-deterministic behavior, and by offering natural ways to handle its pitfalls, we believe we have built a system that gets the most out of this remarkable technological advance.

What are your thoughts? Are we doing enough? How can we do better?